Execution & Monitoring

Execution of a Dataflow

After creating a dataflow, you can integrate it into your applications. You can also import its cURL command into any API testing tool to execute or test it.

Copying a Dataflow cURL

To copy the dataflow cURL:

-

Identify the Dataflow Scope:

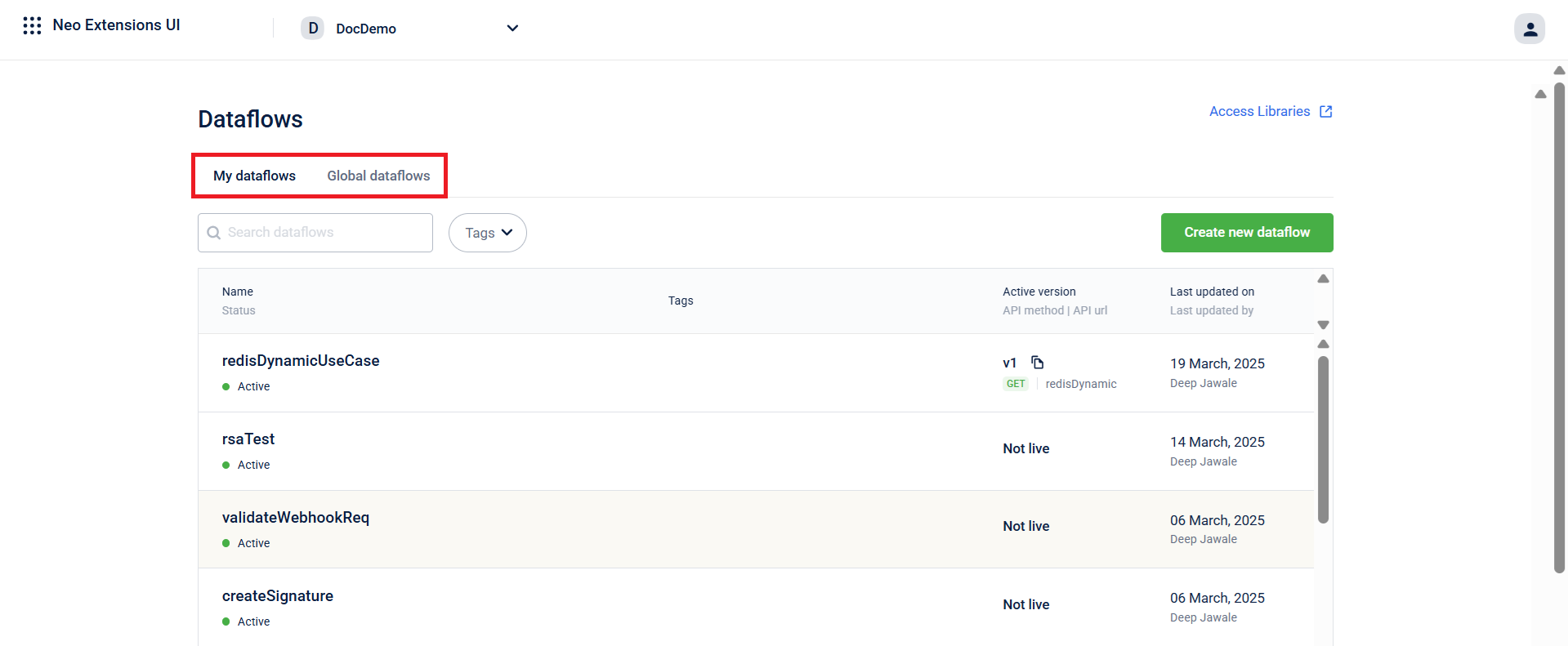

Go to the Dataflows page and select the tab based on the dataflow’s scope:-

My Dataflows → For dataflows within your organization.

-

Global Dataflows → For dataflows at the global level.

-

- Select the Dataflow:

  - Click the dataflow you want to execute.
  - Choose the version you want to run.

-

Copy the cURL:

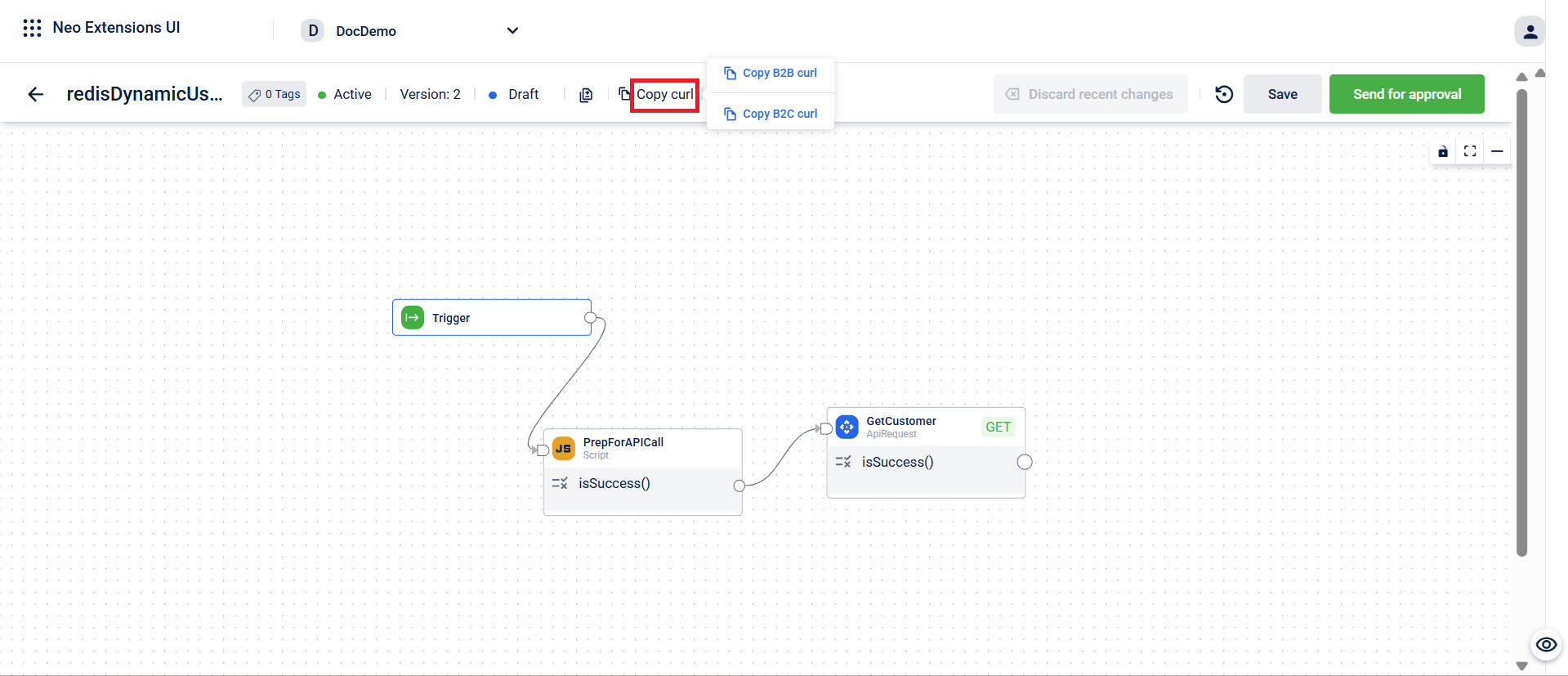

On the dataflow composition page, hover over Copy curl and click to copy the required cURL.-

B2B cURL → Use this when one system interacts with another (business to business).

-

B2C cURL → Use this for customer-facing interactions where the system communicates directly with end users.

Note- B2B cURL: Uses OAuth or Basic authorization.

- B2C cURL: Uses mobile OTPs or social logins for authorization.

-
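If you prefer to call a dataflow from code rather than from an API testing tool, a B2B cURL can be reproduced with Python's standard library. This is a minimal sketch that assumes the copied cURL authenticates through an `Authorization` header in the standard HTTP Basic (RFC 7617) or OAuth 2.0 bearer (RFC 6750) form. The URL, the GET method, and the helper names are hypothetical placeholders; take the real values from the cURL you copied.

```python
import base64
import urllib.request

# Hypothetical placeholder: replace with the URL from the B2B cURL you copied.
DATAFLOW_URL = "https://example.com/your-dataflow-endpoint"


def basic_auth_header(username: str, password: str) -> str:
    """Return an HTTP Basic Authorization value (RFC 7617)."""
    credentials = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


def bearer_auth_header(access_token: str) -> str:
    """Return an OAuth 2.0 bearer Authorization value (RFC 6750).

    Assumption: the copied B2B cURL sends its OAuth token in this form.
    """
    return "Bearer " + access_token


def build_b2b_request(url: str, authorization: str,
                      method: str = "GET") -> urllib.request.Request:
    """Prepare, but do not send, a request that carries B2B credentials."""
    return urllib.request.Request(
        url, headers={"Authorization": authorization}, method=method
    )


if __name__ == "__main__":
    req = build_b2b_request(DATAFLOW_URL, basic_auth_header("user", "pass"))
    print(req.get_method(), req.full_url)
    print(req.get_header("Authorization"))  # Basic dXNlcjpwYXNz
    # urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```

B2C cURLs authorize end users through mobile OTPs or social logins, so they are not covered by this sketch.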

Executing a Cross-Organizational Dataflow

Cross-organizational dataflows allow execution across different organizations.

Execution Scope

- Global Dataflows: Defined at the global organization level and accessible to all organizations.

- Parent Dataflows: Defined in a parent organization and accessible to the parent organization itself and to its child organizations. Applicable to Connected Organizations.

- Organization Dataflows: Defined in your organization and available only to your organization.

Note

- Global dataflows execute within the context of the calling organization, even though they are globally defined.

- Context Switching: Execution can switch from a parent organization to its child organization using a "context switch" block. However, execution cannot switch from a child organization to its parent.

- A parent organization cannot execute a dataflow from a child organization.
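The access rules above can be summarized as a small model. This is an illustrative sketch, not Neo's implementation: the `Org` type and the `can_execute` and `can_context_switch` helpers are hypothetical, and the model assumes that "child organization" means a direct child.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Org:
    """Hypothetical organization node; `parent` is None for a top-level org."""
    name: str
    parent: Optional["Org"] = None


def can_execute(caller: Org, scope: str, owner: Optional[Org] = None) -> bool:
    """Model whether `caller` may run a dataflow of `scope` defined by `owner`."""
    if scope == "global":
        # Global dataflows are accessible to every org and run in the caller's context.
        return True
    if scope == "parent":
        # Parent dataflows: usable by the defining org itself and its direct children.
        return owner is not None and (owner == caller or owner == caller.parent)
    if scope == "org":
        # Organization dataflows: only the defining org; a parent cannot run a child's.
        return owner == caller
    raise ValueError(f"unknown scope: {scope!r}")


def can_context_switch(current: Org, target: Org) -> bool:
    """A context switch may move execution from a parent to its child, never upward."""
    return target.parent == current


if __name__ == "__main__":
    parent = Org("parent")
    child = Org("child", parent=parent)
    print(can_execute(child, "parent", owner=parent))  # True
    print(can_execute(parent, "org", owner=child))     # False
    print(can_context_switch(parent, child), can_context_switch(child, parent))  # True False
```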

Testing a Cross-Organizational Dataflow

- Import the cURL command into an API testing tool.

- Set the `x-cap-neo-dag-scope` header to one of the following values:

  - `global`: Executes a dataflow from the global organization.
  - `parent`: Executes a dataflow from the parent organization.
  - `org`: Executes a dataflow from your organization.

- Enter Body Parameters:

  - For POST and PUT methods, provide the necessary request body parameters.


- Execute the Dataflow:

  - Run the cURL command from the API testing tool.
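The same test request can also be prepared in code. This sketch uses Python's standard library; the URL, the body fields, and the `build_dataflow_request` helper are hypothetical, and only the `x-cap-neo-dag-scope` header name and its `global`, `parent`, and `org` values come from the steps above. It attaches a body only for POST and PUT, on the assumption that the dataflow accepts JSON.

```python
import json
import urllib.request
from typing import Optional

# Values documented for the x-cap-neo-dag-scope header.
VALID_SCOPES = {"global", "parent", "org"}


def build_dataflow_request(url: str, scope: str, method: str = "GET",
                           body: Optional[dict] = None,
                           authorization: Optional[str] = None) -> urllib.request.Request:
    """Prepare (but do not send) a request that runs a dataflow in the given scope."""
    if scope not in VALID_SCOPES:
        raise ValueError(f"scope must be one of {sorted(VALID_SCOPES)}, got {scope!r}")
    method = method.upper()
    headers = {"x-cap-neo-dag-scope": scope}
    if authorization:
        # Copy the Authorization value from your dataflow cURL.
        headers["Authorization"] = authorization
    data = None
    if method in ("POST", "PUT"):
        # Assumption: the dataflow accepts a JSON request body.
        data = json.dumps(body or {}).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(url, data=data, headers=headers, method=method)


if __name__ == "__main__":
    req = build_dataflow_request(
        "https://example.com/your-dataflow-endpoint",  # hypothetical URL
        scope="parent",
        method="POST",
        body={"exampleField": "value"},                # hypothetical body
    )
    print(req.get_method(), req.header_items())
    # urllib.request.urlopen(req) would execute the dataflow; omitted here.
```

`urllib` stores header names with only the first letter capitalized (`X-cap-neo-dag-scope`). HTTP header names are case-insensitive, so this should not change how the server reads the header.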

Observability & Monitoring

Use the Dev Console to view and analyze the performance of your Neo API and of the APIs used in the Neo dataflow, to view logs, and more. The Dev Console helps you monitor and debug the dataflow.
